Mapping database tables to C++ classes is a common challenge. Let us try to map a database table person to a class.

using namespace boost::gregorian;

using std::string;

class person {

public:

string first_name;

string last_name;

date date_of_birth;

int height;

float weight;

};

Under perfect conditions this would work just fine. But in practice, when rolling out our database layer (with elements of RDBMS-2-OO), several problems arise. One of them is handling null values.

In C# we would use (...and strongly typed datasets do) nullable types.

public class Person {

public string FirstName;

public string LastName;

public DateTime DateOfBirth;

public int? Height; // Nullable type.

public float? Weight; // Nullable type.

}

However there is no similar language construct in C++, so we will have to create it. Today's goal is to give built-in value types the ability to be null, and to be able to detect when they are null.

We will create new types, but we want them, just like in C#, to behave like the existing built-in types.

So why not derive from built-in types?

class int_ex : int {

}

I've been thinking long and hard about the appropriate answer to this good question. And finally I came up with the perfect argument why this is a bad idea. It's because it won't compile.

Luckily we have templates. Let us start by declaring our class with a simple converting constructor, one that takes a plain value of type T.

template <typename T>

class nullable {

private:

T _value;

public:

// Converting constructor: builds a nullable from a plain T.

nullable(T value) {

_value=value;

}

};

With this code we have wrapped built in value type into a template. Let us explore possibilities of this new type.

int n=10;

nullable<int> i=20; // This actually works!

nullable<int> j=n; // This too.

nullable<int> m; // This fails because we have no default constructor.

This is a good start. Because we declared a constructor of our own, the compiler no longer generates a default constructor for us, so the last line won't compile. Adding a default constructor will fix this. Its behavior will be to make the value null. For this we will also add an _is_null member to our class. Last but not least, we will add an assignment operator to the class.

template <typename T>

class nullable {

private:

T _value;

bool _is_null;

public:

// Converting constructor: builds a nullable from a plain T.

nullable(T value) {

_value=value;

_is_null=false;

}

// Default ctor.

nullable() {

_is_null=true;

}

// Assignment operator =

nullable<T>& operator=(const nullable<T>& that) {

if (that._is_null) // Make sure null value stays null.

_is_null=true;

else {

_value=that._value;

_is_null=false;

}

return *this;

}

};

Now we can do even more things with our class.

int n=10;

nullable<int> i=20; // Works. Uses converting constructor.

nullable<int> j; // Works. Uses default ctor. j is null.

j=n; // Works. Uses converting constructor + assignment operator.

j=i; // Works. Uses assignment operator.

Next we would like to add the ability to assign a special value, null, to variables of our nullable type. We will use a trick to achieve this. We will first create a special type to distinguish the type of null from all other types. Then we will add another constructor, overloaded for the null type.

class null_type {

};

static null_type null;

template <typename T>

class nullable {

private:

T _value;

bool _is_null;

public:

// Assigning null.

nullable(const null_type&) {

_is_null=true;

}

// Converting constructor: builds a nullable from a plain T.

nullable(T value) {

_value=value;

_is_null=false;

}

// Default ctor.

nullable() {

_is_null=true;

}

// Assignment operator =

nullable<T>& operator=(const nullable<T>& that) {

if (that._is_null) // Make sure null value stays null.

_is_null=true;

else {

_value=that._value;

_is_null=false;

}

return *this;

}

};

Let us further torture our new type.

nullable<int>m=100; // Works! m=100!

nullable<int>j; // Works! j is null.

nullable<int>n=j=m; // Works! All values are 100.

nullable<int>i=null; // Works! i is null.

Our type is starting to look like a built-in type. However, we still can't use it in expressions in place of a normal value type. So let's do something really dirty. Let us add a cast operator to the type provided as the template argument.

// Cast operator.

operator T() {

if (!_is_null)

return _value;

}

Automatic casts are nice and they work under perfect conditions. But what if the value of nullable type is null? We'll test for the null condition in our cast operator and throw a null_exception.

Throwing an exception is an act of brutality. We would like to allow the user to gracefully test our type for a null value without throwing the exception. For this we will also add an is_null() function to our class.

class null_exception : public std::exception {

};

class null_type {

};

static null_type null;

template <typename T>

class nullable {

private:

T _value;

bool _is_null;

public:

// Assigning null.

nullable(const null_type&) {

_is_null=true;

}

// Converting constructor: builds a nullable from a plain T.

nullable(T value) {

_value=value;

_is_null=false;

}

// Default ctor.

nullable() {

_is_null=true;

}

// Assignment operator =

nullable<T>& operator=(const nullable<T>& that) {

if (that._is_null) // Make sure null value stays null.

_is_null=true;

else {

_value=that._value;

_is_null=false;

}

return *this;

}

// Cast operator.

operator T() const {

if (!_is_null)

return _value;

else

throw null_exception(); // Throw by value, not a heap-allocated pointer.

}

// Test value for null.

bool is_null() const {

return _is_null;

}

};

We're almost there. The following code will now work with the new nullable type.

nullable<int> i; // i is null

nullable<int> j=10;

i=2*j; // i and j behave like integer types.

j=null; // you can assign null to nullable type

if (j.is_null()) { // you can check j

int n=j+1; // Will throw null_exception because j is null

}

There are still situations in which nullable types do not act or behave like the built in value types.

// This will fail...

for(nullable<int> i=0; i<10;i++) {

}

To fix this we need to implement our own ++ operator.

// Operator ++ (prefix and postfix).

nullable<T>& operator++() {

if (!_is_null) {

++_value;

return (*this);

} else

throw null_exception(); // Throw by value, not a heap-allocated pointer.

}

nullable<T> operator++(int) {

if (!_is_null) {

nullable<T> temp=*this;

++(*this);

return temp;

} else

throw null_exception();

}

This is it. We have developed this type to the point where it serves our purpose: it enables us to write the database layer.

using namespace boost::gregorian;

using std::string;

class person {

public:

string first_name;

string last_name;

date date_of_birth;

nullable<int> height;

nullable<float> weight;

};

There's still work to be done: implementing the remaining operators (such as ==) and finding new flaws and differences between our type and the built-in types. I leave all that to you, dear reader. If you extend the class please share your extensions in the comments section for others to use. Thank you.
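To get you started on ==, here is one possible sketch. It repeats a compact version of the class so that it compiles on its own. The semantics are my assumption, not a rule: two nulls compare equal and a null never equals a value (SQL itself would say the result is unknown). The equality_examples() function is just an illustration.

```cpp
#include <cassert>
#include <exception>

class null_exception : public std::exception {};
class null_type {};
static null_type null;

template <typename T>
class nullable {
    T _value;
    bool _is_null;
public:
    nullable() : _value(), _is_null(true) {}
    nullable(const null_type&) : _value(), _is_null(true) {}
    nullable(T value) : _value(value), _is_null(false) {}

    bool is_null() const { return _is_null; }

    operator T() const {
        if (_is_null) throw null_exception();
        return _value;
    }

    // Assumed semantics: null == null is true, null == value is false.
    friend bool operator==(const nullable& a, const nullable& b) {
        if (a._is_null || b._is_null) return a._is_null && b._is_null;
        return a._value == b._value;
    }
    // Mixed overloads keep "a == 5" from being ambiguous with the
    // built-in comparison reached through the cast operator.
    friend bool operator==(const nullable& a, const T& b) {
        return !a._is_null && a._value == b;
    }
    friend bool operator==(const T& a, const nullable& b) { return b == a; }
};

void equality_examples() {
    nullable<int> a = 5, b = 5, n;
    assert(a == b);     // two equal values
    assert(a == 5);     // nullable against plain int
    assert(5 == a);     // and the other way around
    assert(n == null);  // null test without calling is_null()
    assert(!(a == n));  // a value never equals null, and nothing throws
    assert(!(n == 5));
}
```

Note that none of these comparisons goes through the cast operator, so comparing a null value does not throw.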

Categories c++ , compilation , patterns

I expected most of my readership to come from Africa, India and Asia. But they are from Silicon Valley, California.

I have always wanted to know how ./configure does its magic. To a GNU newbie the GNU Build System seems utterly complex. The available manuals for Autoconf, Automake and Libtool are several hundred pages of difficult-to-read text. The learning curve is steep. Way too steep to produce a simple script!

But then, last week as I googled around, I found these gems:

- Using Automake and Autoconf with C++

- Using C/C++ libraries with Automake and Autoconf

- Building C/C++ libraries with Automake and Autoconf

These make understanding the basic path of execution much easier and are an excellent pre-read before feeding your brain with the official manuals.

Categories c++ , compilation , gnu , tools


Coming from a Windows background I was a bit worried about the complexity of accessing Postgres databases from C++. I'm still having nightmares of early ODBC programming back in the 90s. But as a mature developer I quickly overcame Microsoft's fixation on building the mother of all DBMS access technologies; that is, a common database mechanism to access various DBMSes.

Seriously, how many times in life have you ported a database from MS SQL Server to Oracle? There is a brilliant library available for accessing Postgres (and only Postgres!) from C++. It is libpqxx. It comes with a tutorial to help you get started.

Here is the Hello World of libpqxx. It doesn't get any simpler than that. The following code executes a query on a database.

#include <pqxx/pqxx>

using namespace pqxx;

void execute_query() {

connection cn("dbname=my_database");

work w(cn, "mytransaction");

w.exec("INSERT INTO city(city_name) VALUES ('Ljubljana');");

w.commit();

}

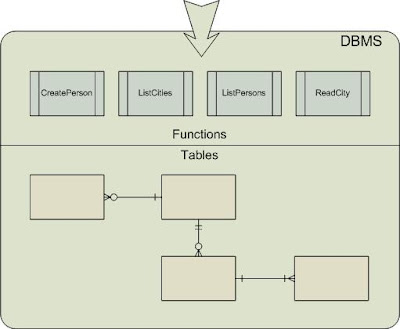

And now for something a bit more complex. Today we're going to discover the art of creating a stored procedure / function layer in Postgres database.

Let us first agree on terminology. In Postgres there is no difference between a stored procedure and a function. A procedure is merely a function returning void. Thus from now on we will only use the term function.

Just in case you wonder: as a mature DBMS, Postgres prepares (precompiles) all functions for optimal performance, exactly as its commercial competitors do.

In contemporary databases functions are commonly used to implement a security layer. It is generally easier to give a user permission to execute a function that manipulates many database tables to complete a business operation than it is to assign him or her just the right permissions on all involved database tables for the same result.

Therefore modern designs introduce an additional layer of abstraction to access database tables. This layer is implemented via functions. In such scenario users can access database tables only through functions. They have no direct access to database tables.

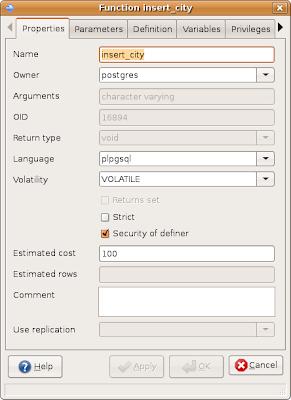

To implement this design we need to know more about the Security of definer concept.

Security of definer

In Postgres a function can be defined to have the “Security of definer” property set. You can set this property in pgAdmin (see the screenshot below).

To set this property manually add SECURITY DEFINER to the end of function definition like this -

CREATE FUNCTION insert_city(character varying)

RETURNS void AS

$BODY$

BEGIN

INSERT INTO city("name") VALUES($1);

END

$BODY$

LANGUAGE 'plpgsql' VOLATILE SECURITY DEFINER;

Adding this to a function will make it run in the context of the owner of the function instead of the context of the caller. This allows a user to insert a city into the table without having insert permission on it, as long as he has permission to execute this function and the owner of the function has permission to insert into the city table. It is a way to implement chained security in Postgres.

Postgres functions that return datasets

Postgres functions that return data sets are poorly documented and their syntax might look a bit awkward to those of you used to SQL Server. When a function in Postgres returns multiple results it must be declared to return SETOF some type. The call syntax for such functions is a bit different than what you are used to. You call them using SELECT * FROM function() instead of the usual SELECT function() call.

SELECT function_returning_setof(); -- Wrong!

SELECT * FROM function_returning_setof(); -- OK!

SELECT function_returning_scalar(); -- OK

Let's imagine we have a table of all cities with two fields – city_id of type SERIAL, and name of type VARCHAR(80). Actually we're imagining this throughout this article.

Here is a code fragment showing how to write a function returning all cities.

CREATE FUNCTION list_all_cities()

RETURNS SETOF city AS

$$

DECLARE

rec record;

BEGIN

FOR rec IN (SELECT * FROM city) LOOP

RETURN NEXT rec;

END LOOP;

END;

$$ LANGUAGE plpgsql;

-- Call the function.

SELECT * FROM list_all_cities();

This code uses a generic type called RECORD. Try experimenting with %ROWTYPE to obtain the same results. The function above returns type SETOF city: each record in the set has the same structure as a record of the city table. But what if you wanted to return another structure? One way is to create a type like this -

CREATE TYPE list_all_city_names_result_type AS (city_name varchar(80));

CREATE FUNCTION list_all_city_names()

RETURNS SETOF list_all_city_names_result_type AS

$$

DECLARE

rec record;

BEGIN

FOR rec IN (SELECT "name" FROM city) LOOP

RETURN NEXT rec;

END LOOP;

END;

$$ LANGUAGE plpgsql;

SELECT * FROM list_all_city_names();

If this imposes too harsh limitations then you can also use the generic RECORD data type as return type and tell the procedure what to expect when calling it.

CREATE FUNCTION list_all_city_names2()

RETURNS SETOF RECORD AS

$$

DECLARE

rec record;

BEGIN

FOR rec IN (SELECT "name" FROM city) LOOP

RETURN NEXT rec;

END LOOP;

END;

$$ LANGUAGE plpgsql;

SELECT * FROM list_all_city_names2()

AS ("city_name" varchar(80)); -- Expect varchar(80)

There's one more trick. I don't recommend it but I'm publishing it anyways. If you'd like to make things really simple for you then you could use another language instead of plpgsql. For example, using sql code you can write queries directly into your function like this -

CREATE FUNCTION getallzipcodes()

RETURNS SETOF zip AS

$BODY$

SELECT * FROM zip;

$BODY$

LANGUAGE 'sql';

Stored procedure layer and automatic code generation

When I create a stored procedure layer I like to generate code for the basic CRUD functions. I only write code for complex business functions by hand. Thus in my next article I am going to write a code generator to do just that for the Postgres/C++ pair.

But right now let's just explain some concepts. CRUD functions are Create (new record from data), Read (given ID), Update (existing record given ID and new data) and Delete (given ID) for a single table. These functions are needed for every table. For example, for table city you would have the following functions:

- create_city(name),

- read_city(id),

- update_city(id,new_name),

- delete_city(id).

Two more types of functions are often needed: get functions and list functions (sometimes called find functions). The difference between a get and a list function is that a get function always returns a single result, for example get_city_id_by_name(name) or get_user_by_phone(phone), whereas a list function returns all records that match the condition provided as parameters, for example list_cities_starting_with(word), list_persons_older_than(age) or list_all_countries().

Some people prefer the list_ prefix and others prefer the find_ prefix for list functions. It is a matter of taste, as long as your programming style is consistent.

The functions listed above will be needed for almost any table and are therefore candidates for automatic code generation. We'll deal with code generation in one of our following articles.
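Just to give a feeling for what such a generator might look like, here is a minimal C++ sketch. It is not the generator from the upcoming article. The helper names crud_function_names and delete_function_sql are made up for this example, and the sketch assumes that every table has an integer key column named after the table with an _id suffix (as city_id in table city).

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: names of the four CRUD functions for a table,
// using the create_/read_/update_/delete_ prefixes from the list above.
std::vector<std::string> crud_function_names(const std::string& table) {
    const char* prefixes[] = {"create_", "read_", "update_", "delete_"};
    std::vector<std::string> names;
    for (const char* prefix : prefixes)
        names.push_back(prefix + table);
    return names;
}

// Hypothetical helper: SQL text of the delete function for a table.
// Assumes the key column is called <table>_id and is an integer.
std::string delete_function_sql(const std::string& table) {
    return "CREATE FUNCTION delete_" + table + "(integer)\n"
           "RETURNS void AS\n"
           "$$\n"
           "BEGIN\n"
           "    DELETE FROM " + table + " WHERE " + table + "_id = $1;\n"
           "END;\n"
           "$$ LANGUAGE plpgsql SECURITY DEFINER;\n";
}

void generator_example() {
    std::vector<std::string> names = crud_function_names("city");
    assert(names.size() == 4);
    assert(names[0] == "create_city");
    assert(names[3] == "delete_city");
}
```

A real generator would read the column list from the database catalog instead of guessing the key column name.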

Categories architectures , patterns , postgres , sql

If you would like to publish SQL or C++ code on your blog hosted by Blogger I recommend SyntaxHighlighter.

You can install the SyntaxHighlighter Blogger widget somewhere on your blog. I installed it at the bottom of this blog.

Use tag pre to publish your code. For example:

<pre name="code" class="SQL">

-- Sample

SELECT * FROM city;

</pre>

will produce this result

-- Sample

SELECT * FROM city;

Supported languages include c++, sql, c#, etc. All supported language tags can be found here.

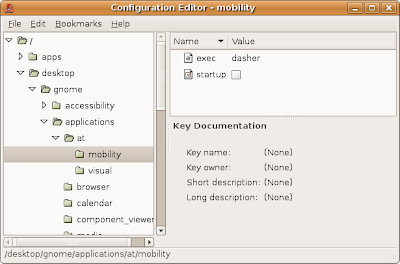

When in Unix you should really do as Unixans do. They store application configuration in the /etc folder and user preferences in the user's home folder, in files whose names start with a dot (.).

So if your application is called maestro then it should store its configuration in /etc/maestro.conf (or /etc/maestro/config.xml or /etc/maestro/app.ini; it doesn't matter as long as it is in /etc). And it should store its user preferences in the ~/.maestro file (or in ~/.maestro/.profile.txt, as long as it is in the user's folder, starts with a dot and the filename hints at its origin).

Even GNOME's GConf (you may run it from the command line using gconf-editor) follows this convention by creating ~/.gconf in your home folder.

Your application should also be configurable via environment variables and command line parameters. But what if a collision of settings occurs? Then you should use the following priorities: low) read the config file, mid) check the environment variables, high) check the command line parameters.

Configuration source with highest priority should prevail. For example - if an option is defined in config file and is also passed as a command line parameter the one passed at the command line should be used.

The easiest way to implement all this is to try to read each setting from the config file, then from the environment variables and then from the command line, overwriting it each time a new value is available. This way the last setting read will prevail. There are multiple libraries available to assist you with reading config files and parsing command line parameters. Though the options for C++ are many, I recommend using Boost.Program_options. It will provide the user of your command line tool with an experience he is familiar with, including command line script files. I explained my preference for the Boost library here.
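The overwrite-in-priority-order idea fits in a few lines. The sketch below uses only the standard library and leaves the actual parsing out: it assumes the three sources have already been read into maps by whatever library you choose. The names settings and merge_settings are invented for this example.

```cpp
#include <cassert>
#include <map>
#include <string>

using settings = std::map<std::string, std::string>;

// Merge from lowest to highest priority. A later source overwrites
// any value that an earlier source has already set.
settings merge_settings(const settings& config_file,
                        const settings& environment,
                        const settings& command_line) {
    settings result = config_file;              // low priority
    for (const auto& kv : environment)          // mid priority
        result[kv.first] = kv.second;
    for (const auto& kv : command_line)         // high priority
        result[kv.first] = kv.second;
    return result;
}

void merge_example() {
    settings file = {{"host", "localhost"}, {"port", "80"}};
    settings env  = {{"port", "8080"}};
    settings cmd  = {{"port", "9090"}};
    settings s = merge_settings(file, env, cmd);
    assert(s["host"] == "localhost");  // only the config file sets it
    assert(s["port"] == "9090");       // the command line wins
}
```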

1. Casing conventions

These are compatible with pgAdmin generated code so I recommend you stick to them.

2. Indenting rules

2.1. Set tab size to 8 (spaces).

2.2. Make it possible to comment out (using --) delimiters and operators (such as comma, AND, OR, JOIN, etc.) by putting them at the beginning of a row.

2.3. When dividing statements into multiple rows, indent them to emphasize the hierarchy of the individual statements.

2.4. Feel free to put entire SQL statement into single row if it will fit.

3. Academic sample from the internet

4. Real life sample (fragment!)

In the old days one called the g++ compiler with a lot of command line switches to tell it where to find all include files and libraries. Nowadays civilized libraries register themselves with a tool called pkg-config. This tool knows how to generate all the command line switches for you if you provide the library name.

To list all libraries registered with the tool call

pkg-config --list-all

To see which libraries need to be linked with openssl library use

pkg-config --libs openssl

The tool will output: -lssl -lcrypto which are exactly the command switches you should provide to compiler. So if you wanted to link your sample with the openssl library you could write:

g++ -o sample sample.cpp `pkg-config --libs --cflags openssl`

It really is that simple. Of course you immediately became interested in how exactly pkg-config does its magic, right? After all if you produce a library of your own you want it to be civilized and to register with pkg-config.

Here's how it's done. When you call pkg-config with a library name (for example libpqxx) it looks for a file named libpqxx.pc. It first searches each folder listed in the PKG_CONFIG_PATH environment variable and then falls back to its default folders, such as /usr/lib/pkgconfig.

After finding a match it extracts the required library data. Here are the contents of the libpqxx.pc file to get a feeling of how this works.

prefix=/usr/local

exec_prefix=${prefix}

libdir=${exec_prefix}/lib

includedir=${prefix}/include

Name: libpqxx

Description: a C++ API to the PostgreSQL database management system.

Version: 3.0

Libs: -L${libdir} -L/usr/lib -lpqxx

Cflags: -I${includedir} -I/usr/include/postgresql

As you can see, the data in this description file is abundant. So after matching the .pc file with the library, pkg-config can easily generate the output for the command switches.
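The ${...} references in the file are plain variable substitutions. The following C++ sketch shows the idea; it is not how pkg-config itself is implemented. The function expand_variables is invented for this example, and unlike the real tool it silently expands unknown variables to nothing.

```cpp
#include <cassert>
#include <map>
#include <string>

// Replace every ${name} in text with its value from vars.
// Assumption of this sketch: unknown names expand to an empty string.
std::string expand_variables(const std::string& text,
                             const std::map<std::string, std::string>& vars) {
    std::string out;
    std::size_t pos = 0;
    while (pos < text.size()) {
        std::size_t start = text.find("${", pos);
        if (start == std::string::npos) { out += text.substr(pos); break; }
        std::size_t end = text.find('}', start + 2);
        if (end == std::string::npos) { out += text.substr(pos); break; }
        out += text.substr(pos, start - pos);   // text before the reference
        auto it = vars.find(text.substr(start + 2, end - start - 2));
        if (it != vars.end()) out += it->second;
        pos = end + 1;                          // continue after the '}'
    }
    return out;
}

void expand_example() {
    // Variables are defined top to bottom, as in the libpqxx.pc file above.
    std::map<std::string, std::string> vars;
    vars["prefix"] = "/usr/local";
    vars["exec_prefix"] = expand_variables("${prefix}", vars);
    vars["libdir"] = expand_variables("${exec_prefix}/lib", vars);
    assert(expand_variables("-L${libdir} -L/usr/lib -lpqxx", vars)
           == "-L/usr/local/lib -L/usr/lib -lpqxx");
}
```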

So there. With tiny bit of new knowledge your library can now register itself properly with the system. You can also create .pc files for libraries that don't behave as good citizens to simplify compilation. Or even debug foreign make files that fail.

.NET spoiled us all. The Framework's Standard Library contains answers to numerous programming challenges. From implementing http client to reading from a database – a plethora of classes speeds up the development and unifies programming experience.

Nothing quite as complete exists for C++. The open source world offers many libraries but without the consistency of .NET. Except for two: the C++ Standard Library and Boost. Parts of the latter are gradually being adopted into the former.

Thus start your C++/Unix programming voyage by reading about Boost. Install it on your system. You do not need to compile it on Ubuntu. Just use Synaptic Package Manager to download and install it. Then, whenever you identify a programming problem, first consult the C++ Standard Library and Boost. Only if a solution can't be found there, continue your search elsewhere.

Because the C programming language played such a pivotal role in history of Unix and open source many libraries are written in it. It is quite common to find a pair - a high quality C library to fulfill some task and a C++ wrapper to provide more modern interface.

For example, you might want to implement the HTTP protocol in a C++/Unix environment by using the Neon library as the underlying technology and the Neon++ wrapper as the actual interface.

Sometimes instead of adding two pluses to wrapper library names, the suffix mm or xx is used. For example, for the C library libgtk the C++ wrapper is called libgtkmm; for libpango, libpangomm; for libcairo, libcairomm; for libpq, libpqxx. This convention makes it easier to search for a wrapper once you have found a C library that performs a task.

If not too much of a compromise try to keep your code consistent with C++ Coding Convention by selecting libraries that only use combination of lowercase letters and underscores for all program identifiers (except for template parameters, which start with capital letter).

I would like to write a small unix business application.

In .NET I would divide my code into three packages: user interface, business logic, and database abstraction layer.

User interface would be a simple form application which would host many user controls and take care of communication between them. Its responsibilities would include taking care of dataset flows and responding to events.

Business logic would be further decomposed to client part and business part. Client part would provide user controls that would use facade objects for various business domains. These would communicate with server part of business logic via web service or COM+; using integrated role based security.

Web service would further call business logic dynamic link library. This would communicate with database abstraction layer library using only datasets. Database abstraction layer library would communicate with SQL Server stored procedures. And these would manipulate database tables.

The task for the weekend is figuring out how to do the same in Ubuntu using C++ with various libraries and Postgres.

Categories architectures , c++ , patterns , postgres , ubuntu

It was Rok Pintar who brought Linux to Slovenia. He published the very first article about it in the Programmer newspaper. And then one day in the early 90s Rok was kind enough to copy 65 floppy discs for me so I could get to know it too. Coming from CP/M and DOS environments and using Windows 3.11 at the time, I was impressed. Technically Linux was far superior to Windows 3.11.

But frankly I did not know what to do with it. In addition first MSDN was published and many companies started seeking "nigl-nagl-neu" MFC or VB programmers. I was in my early twenties, proficient in Clipper, and eager to earn me some easy money. Or so I thought. So when Windows 95 came I decided to move to this exciting new platform.

Six months ago I got sick of the colorful Vista, the impossible new Microsoft Office user interface and the rest of the kitsch. One weekend I decided to install Ubuntu Linux on my home machine to check its progress. And I still use it today.

In my opinion the Ubuntu distribution is a solid, mature desktop system. It has everything an office workplace needs. It is not a complete Windows replacement because it lacks killer applications in specific areas, such as Bloomberg in finance, AutoCAD in engineering, Adobe Premiere in movie post production, etc. But it is appropriate for most workplaces now.

Now it needs a push by its community. To attract new generation of techies we need publicly available knowledge in areas of IT system architecture and programming that is not available for this platform today.

For example, when I install a Windows network there are books available to tell me about the Windows AD domain as the optimal solution for security. But when I install Ubuntu there are no sources to advise me about using NIS / NIS+, Kerberos and other solutions.

Googling these issues soon leads to information overkill. A newcomer is confused. He has all these technologies for creating small Ubuntu LAN available but yet he is not even able to create common network user names for workstation users and common folders.

Ubuntu programmers face similar troubles. Every child can pick up a book and read about developing a three tiered business application for Windows using SQL Server and .NET. But there are no good resources about business application programming for Linux. While there is an abundance of know-how about SQL Server, chained security, .NET, and web services, the situation is different for Ubuntu. We're pretty much on our own. It's easy to develop a web service in .NET. There is only one way to do it. It's hard in Ubuntu and there are many different solutions, from creating your own server to using PHP.

Thus as I uncover the secrets of Unix business programming I will share them with you on this blog. I will write from the perspective of former C++/VB/.NET/SQL Server developer. I will develop a framework that will provide guidelines and best practices and enable rapid business application development to hobby programmers (like myself) and/or to professionals who might some day follow my path to the uncharted area of Ubuntu business application development.

At the end I promise to follow one very important rule. I will not publish "40 ways to establish a LAN domain in Linux" articles. I will only describe the best (and most Unix) way of doing it. And stick to it. This blog will be about solutions; not about choices.

If you are like me and are interested in developing for Unix, why don't you join me in writing this blog?